The Big 3 Gaps in Generative AI

I’ve written this blog with the intention of sharing my knowledge. The contents of this blog are my opinions only.

For many, ChatGPT feels both scary and magical. As soon as the public saw the potential of Large Language Models (LLMs), news feeds were flooded with evangelists claiming that ChatGPT could do everything from doing your homework to making you rich.

However, all the noise and hyperbole around LLMs has made it difficult for non-practitioners of the AI arts to construct a clear mental model of what LLMs are and are not capable of.

Given this, I wrote this short piece to help others understand what LLMs really are, what their biggest limitations are (which I call ‘The Big 3 Gaps in Generative AI’), and how to think about where they should and should not be used.

What is an LLM?

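Text-generation LLMs, of which ChatGPT is one, are statistical models under the hood. Specifically, they are models designed to guess the next word in a sequence based on a prompt and the words they have generated so far. (Strictly speaking, they predict ‘tokens’, which can be whole words or pieces of words, but thinking in terms of words is close enough here.)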

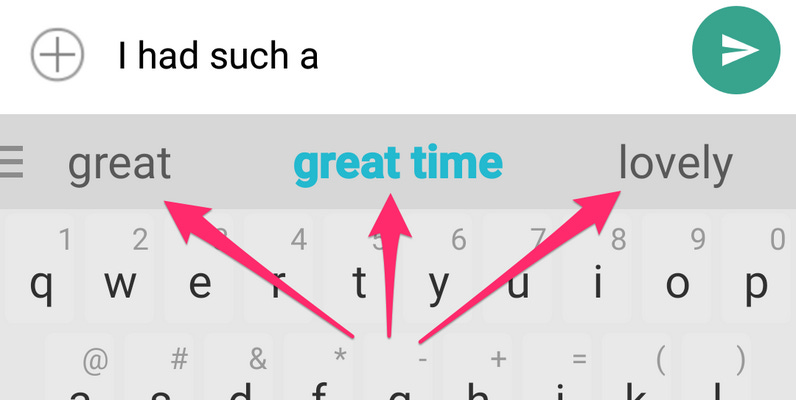

One can think of this as similar to how many smartphones now have a ‘next word guess’ just above the keyboard.
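To make the ‘next word guess’ idea concrete, here is a deliberately tiny Python sketch. The lookup table `NEXT_WORD` and the function `generate` are hypothetical, made up purely for illustration; a real LLM replaces the table with a neural network that scores every possible next token given all of the text so far, not just the last word.

```python
# Hypothetical, hand-written "next word guess" table for illustration only.
# A real LLM uses a neural network that scores every possible next token
# given the whole preceding text, not just the last word.
NEXT_WORD = {
    "the": "cat",
    "cat": "sat",
    "sat": "on",
    "on": "the",
}


def generate(prompt: str, max_new_words: int = 5) -> str:
    """Extend the prompt one guessed word at a time, feeding each guess back in."""
    words = prompt.split()
    for _ in range(max_new_words):
        guess = NEXT_WORD.get(words[-1])
        if guess is None:  # no suggestion for the last word, so stop early
            break
        words.append(guess)
    return " ".join(words)


print(generate("the"))  # prints: the cat sat on the cat
```

Notice that the output happily loops back on itself: the table only knows what tends to come next, not whether the result makes sense.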

In effect, these models are trained by taking a large set of text data (such as the copies of much of the internet provided by Common Crawl) and fitting a model to it on very large clusters of GPUs (often NVIDIA A100s or H100s, hence the early 2023 spike in NVIDIA’s share price).

During training, model builders try to capture the patterns of language in the training data as probabilities (e.g. out of all the times we see the word “George” in a sentence, what is the probability that the next word will be “Washington” versus any other word in the English language?).
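The “George” example can be sketched by simply counting which words follow “george” in a small corpus. This is a bigram count, a far simpler technique than what real LLMs use, and the four-sentence `corpus` below is a made-up stand-in for “much of the internet”; the function name `next_word_probabilities` is also hypothetical.

```python
from collections import Counter

# A tiny made-up corpus standing in for "much of the internet".
corpus = [
    "george washington was the first president",
    "george washington crossed the delaware",
    "george orwell wrote nineteen eighty four",
    "george washington lived at mount vernon",
]


def next_word_probabilities(corpus: list[str], word: str) -> dict[str, float]:
    """Estimate P(next word | word) by counting what follows `word`."""
    counts = Counter()
    for sentence in corpus:
        tokens = sentence.split()
        # Walk over adjacent word pairs: (current, following).
        for current, following in zip(tokens, tokens[1:]):
            if current == word:
                counts[following] += 1
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


print(next_word_probabilities(corpus, "george"))
# prints: {'washington': 0.75, 'orwell': 0.25}
```

Real models learn these probabilities with billions of parameters and condition on far more than the single previous word, but the underlying goal of estimating what is likely to come next is the same.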

While there is a lot more to how these models work, this mental model is enough for our purposes. For those curious about the nuts and bolts, I recommend reading Attention Is All You Need, the foundational paper that made the recent AI wave possible. If that feels confusing on its own (which it likely will), also watch Andrej Karpathy’s ChatGPT video, which breaks it down a little more.

The Big 3 Gaps in Generative AI

Armed with this mental model and some experience playing with LLMs, we can see three major gaps in their abilities:

LLMs do not generate results based on what is real or true (they generate responses based on the patterns they have seen in their training data)

LLMs are not sentient (and as a result are incapable of determining their own objectives)

LLMs are really, really bad at math

Firstly, consider their distance from reality. These tools are fundamentally statistical models built to take in one set of words and guess, one word at a time, what the output should look like. This means that they do not consult an authoritative source of truth when they answer a question, nor do they determine the truth by comparing multiple sources and reaching an independent conclusion. LLMs make authoritative-sounding guesses based on the information they have seen in a large subset of the internet, which may or may not accurately reflect reality.
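A toy sketch shows why frequency, not truth, drives the answer. The `training_text` below is hypothetical: a false claim is repeated three times and the true one appears once, and the hypothetical `complete` function simply returns the most common continuation, much as a model trained on that text would favour it.

```python
from collections import Counter

# Hypothetical training text: a false claim is repeated more often than the true one.
training_text = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]


def complete(prompt: str, corpus: list[str]) -> str:
    """Return the word that most often followed `prompt` in `corpus`.

    Nothing here checks whether the answer is true: only frequency matters.
    Returns an empty string if the prompt never appeared.
    """
    followers = Counter(
        sentence[len(prompt):].split()[0]
        for sentence in corpus
        if sentence.startswith(prompt + " ")
    )
    if not followers:
        return ""
    word, _count = followers.most_common(1)[0]
    return word


print(complete("the moon is made of", training_text))  # prints: cheese
```

Real LLMs are trained on far larger and generally more reliable text, so the effect is rarely this blunt, but nothing in the training objective itself rewards truth over popularity.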

Secondly, consider their lack of sentience. In a strictly literal sense, LLMs are not sentient because they have no agency to act without human prompting (even if one LLM can technically write prompts for another, the underlying intent, or seed prompt, must still come from a human). However, it is more important to understand this fact from a metaphysical perspective: LLMs are not equipped to make independent judgements of right and wrong based on personally held moral or ethical principles the way humans do.

For example, Joe Biden could ask an LLM whether he should provide nuclear weapons to Ukraine, and the LLM could give an authoritative-sounding answer.

However, in a situation like this, the US President needs to make an independent determination of what the objectives of the United States are, which of those objectives take priority over others, and which paths to achieving them are morally acceptable.

Humans define these objectives and make moral judgements for decisions like these (as well as for plenty of less consequential ones) based on the fundamental beliefs and assumptions they develop from a lifetime of sensory data. Nor are there universally agreed ‘correct answers’ in these situations for an LLM to anchor to, since what counts as correct varies widely with the premises each person brings to the problem.

Furthermore, an LLM’s output could change substantially depending on how the President phrased the question, rather than being grounded in any universal moral or ethical principles. As a result, neither ChatGPT nor any other LLM can reasonably be relied upon to provide a valid perspective on morally challenging, thorny topics like this.

Finally, consider their lack of mathematical ability. LLMs struggle with math because they are designed only to guess the next word or number in a sequence based on what they have seen before; they are not designed to learn the fundamental meaning of numbers and their patterns. As a result, LLMs are not only bad at arithmetic (which traditional computers are excellent at), but also currently incapable of solving unsolved problems in pure mathematics.
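The difference between recalling number patterns and actually computing can be sketched as follows. The `seen_text` table and both functions are hypothetical illustrations, and the sketch exaggerates the gap: real LLMs generalise beyond exact matches and often get small sums right, whereas here `pattern_answer` only succeeds on sums it has literally seen.

```python
from typing import Optional

# Hypothetical "memorised" sums: strings a model happened to see during training.
seen_text = {
    "2 + 2 =": "4",
    "7 + 5 =": "12",
    "10 + 10 =": "20",
}


def pattern_answer(question: str) -> Optional[str]:
    """Answer only by recalling text seen before (a crude stand-in for an LLM)."""
    return seen_text.get(question)


def computed_answer(question: str) -> int:
    """Answer by actually doing the arithmetic, as a traditional program would."""
    left, _plus, right, _equals = question.split()
    return int(left) + int(right)


for q in ["2 + 2 =", "4821 + 1379 ="]:
    print(q, pattern_answer(q), computed_answer(q))
# prints:
# 2 + 2 = 4 4
# 4821 + 1379 = None 6200
```

The computed answer works for any pair of integers because it applies the rule of addition; the pattern-based answer is only as good as the examples it happened to see.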

It is not impossible that AI researchers will one day build new models that overcome these limitations. However, these particular cracks are fundamental to how present-day LLMs have been architected and trained, and are unlikely to be resolved simply by training on more data, for longer, on more GPUs. AI systems that solve these problems will require new leaps forward in methodology, and it is anybody’s guess when and how that will happen.

LLMs Are Digital Intuition

The great contribution of LLMs is that they have given computers an intuitive grasp of natural human language.

Learning the magic tricks behind ChatGPT may make these models seem less impressive than they did on first interaction. However, they learn language much as we humans do when we are young (we first learn grammar by observing which words are used where, long before we learn the language of nouns, verbs and adjectives), which is no small feat.

That being said, while LLMs have given computers a form of digital intuition, intuition alone is not enough to solve many problems. Understanding and respecting these limits is crucial for allowing these tools to help us, rather than hinder us.

Thank You for Reading!

I hope you found this piece enlightening or helpful in some way.

If you would like to share your thoughts with me about this piece or request different pieces in future, let me know via this email: seeking.brevity@gmail.com